How Delta Lake ensures ACID Transactions

ACID transactions are a critical feature of most databases. However, on storage systems such as HDFS or S3, it is generally hard to provide the same guarantees that ACID databases give us. To implement ACID transactions, Delta Lake maintains a transaction log that tracks every commit made to the table directory. Delta Lake provides serializable isolation levels to keep data consistent across many concurrent users.

Let’s discuss each element of ACID in Delta Lake.

Atomicity

Delta Lake guarantees atomicity through its transaction log: every operation that completes successfully is recorded in the log, and an operation that fails is not recorded at all. This ensures that partially written data never becomes visible, so it cannot leave the table in an inconsistent or corrupted state.
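To make the idea concrete, here is a minimal sketch of log-based atomicity in plain Scala. It is a toy model, not Delta Lake's API or implementation: `ToyTable`, `Commit`, and the file names are hypothetical. A write stages its data files first and appends one log entry only if the whole job succeeds, and readers only see files that the log refers to.

```scala
import scala.collection.mutable.ArrayBuffer

// Toy model only (hypothetical, not Delta Lake's API): one log entry per successful write.
final case class Commit(version: Long, addedFiles: Seq[String])

final class ToyTable {
  private val writtenFiles = ArrayBuffer.empty[String] // every file physically written
  private val log = ArrayBuffer.empty[Commit]          // the "transaction log"

  // Runs a write job that stages files through `stage`. The commit entry is
  // appended only after the job returns, so a job that throws records nothing.
  def write(job: (String => Unit) => Unit): Unit = {
    val pending = ArrayBuffer.empty[String]
    job { file =>
      writtenFiles += file
      pending += file
      ()
    }
    log += Commit(log.size.toLong, pending.toList)
  }

  // Readers only see files that a committed log entry refers to.
  def visibleFiles: Seq[String] = log.flatMap(_.addedFiles).toList

  // Files left behind by failed jobs: present on "disk" but invisible to readers.
  def orphanFiles: Seq[String] = {
    val visible = visibleFiles.toSet
    writtenFiles.filterNot(visible).toList
  }

  def version: Long = log.size.toLong - 1
}

val table = new ToyTable
table.write { stage => stage("part-0000.parquet") } // succeeds: version 0

try {
  table.write { stage =>
    stage("part-0001.parquet")
    throw new RuntimeException("Oops!") // fails before the commit is recorded
  }
} catch { case _: RuntimeException => () }

println(table.visibleFiles) // List(part-0000.parquet)
println(table.orphanFiles)  // List(part-0001.parquet)
```

Because the commit point is a single small action (appending one log entry), a job becomes visible either as a whole or not at all.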

Consistency

With serializable write isolation, data remains available for reads while writes are in progress, and users always see a consistent version of it.

Isolation

Delta Lake allows concurrent writes to a table, and the resulting Delta table is the same as if all the write operations had been executed one after another (in isolation).

Durability

Committed data is written directly to disk, so it remains available even in case of a failure. This is how Delta Lake satisfies the durability property.

Atomicity and Consistency Issue in Spark

```scala
// Assumes an active SparkSession named `spark` (as in spark-shell)
spark
  .range(1000)
  .repartition(1)
  .write.mode("overwrite").csv("/data/example-1")
```

This code creates a CSV file with 1000 rows in the /data/example-1 directory.

Now we’ll create a second job that overwrites the directory with 500 rows, but we’ll make it fail midway:

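```scala
import spark.implicits._ // provides the encoder that .map needs

spark
  .range(500)
  .repartition(1)
  .map { i =>
    // Make the job fail partway through: the task throws once it passes row 100
    if (i > 100) {
      throw new RuntimeException("Oops!")
    }
    i
  }
  .write
  .mode("overwrite")
  .csv("/data/example-1")
```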

After executing the above code, you’ll see that there is no data in the /data/example-1 directory: the new job failed, and it had already removed the existing data before writing the new data. In overwrite mode, the Spark write API deletes the old files and then creates new ones. These two operations are not transactional, so there is a window in which the data does not exist. If the overwrite operation fails, you lose all your data.
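The same failure mode can be modelled without Spark. The sketch below is a hypothetical local-filesystem model of a delete-then-write overwrite (the helpers `naiveOverwrite` and `listFiles` are made up for illustration, and this is not how Spark is implemented internally). It shows that once the delete has happened, a failure during the write leaves nothing behind.

```scala
import java.nio.file.{Files, Path}
import scala.jdk.CollectionConverters._

// Lists the entries of a directory, closing the underlying stream.
def listFiles(dir: Path): List[Path] = {
  val stream = Files.list(dir)
  try stream.iterator().asScala.toList
  finally stream.close()
}

// Hypothetical model of a non-transactional overwrite: delete first, then write.
// `failAt` simulates a task that throws once it reaches that row index.
def naiveOverwrite(dir: Path, rows: Seq[Long], failAt: Option[Int]): Unit = {
  // Step 1: delete the existing output. Nothing undoes this if step 2 fails.
  listFiles(dir).foreach(p => Files.delete(p))
  // Step 2: compute the new rows, then write them out.
  val lines = rows.zipWithIndex.map { case (row, idx) =>
    if (failAt.exists(limit => idx >= limit)) throw new RuntimeException("Oops!")
    row.toString
  }
  Files.write(dir.resolve("part-00000.csv"), lines.asJava)
}

val dir = Files.createTempDirectory("example-1")

naiveOverwrite(dir, 0L until 1000L, failAt = None) // first job: 1000 rows
val rowsBefore = Files.readAllLines(dir.resolve("part-00000.csv")).size()

try {
  naiveOverwrite(dir, 0L until 500L, failAt = Some(101)) // second job fails midway
} catch { case _: RuntimeException => () }

println(rowsBefore)          // 1000
println(listFiles(dir).size) // 0: the old data is gone and nothing replaced it
```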

How Delta Lake Fixes the Atomicity and Consistency Issue

```scala
// Same write in Delta format (requires the Delta Lake library on the classpath)
spark
  .range(1000)
  .repartition(1)
  .write.format("delta")
  .mode("overwrite").save("/data/example-1")
```

This code creates a Parquet file with 1000 rows in the /data/example-1 directory, along with a _delta_log directory that records the commit.

Now we’ll create another job that fails with the same exception, but this time using Delta Lake:

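```scala
import spark.implicits._ // provides the encoder that .map needs

spark
  .range(500)
  .repartition(1)
  .map { i =>
    // Same failure as before: the task throws once it passes row 100
    if (i > 100) {
      throw new RuntimeException("Oops!")
    }
    i
  }
  .write
  .format("delta")
  .mode("overwrite")
  .save("/data/example-1")
```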

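After executing the above code, you’ll see that although the new job failed, the old data is still available: the table still contains the original 1000 records. Any partial Parquet files the failed job wrote are ignored, because they were never committed to the transaction log. This is how Delta Lake ensures atomicity and consistency.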

How Delta Lake Implements Isolation and Durability

Apache Spark does not have a strict notion of a commit: a Spark job has task-level commits and, finally, a job-level commit. Delta Lake handles concurrent commits using optimistic concurrency control. The Delta Lake transaction log (also known as the Delta Log) is an ordered record of every transaction that has ever been performed on a Delta Lake table since its inception.
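The Delta Log lives in a `_delta_log` directory inside the table directory, and each commit is a JSON file named after its version number, zero-padded to 20 digits (for example `00000000000000000000.json` for the first commit). The sketch below uses a hypothetical `DeltaLogPaths` helper to show how that naming gives the log its order; other files in the directory, such as checkpoint Parquet files and `_last_checkpoint`, are simply skipped here.

```scala
// Hypothetical helper for the `_delta_log` naming scheme: one JSON file per commit,
// named by its version zero-padded to 20 digits.
object DeltaLogPaths {
  private val CommitFile = """(\d{20})\.json""".r

  def commitFileName(version: Long): String = {
    require(version >= 0, "versions start at 0")
    f"$version%020d.json"
  }

  // Version of a commit file, or None for other files (checkpoints, _last_checkpoint, ...).
  def versionOf(fileName: String): Option[Long] = fileName match {
    case CommitFile(digits) => Some(digits.toLong)
    case _                  => None
  }

  // Sorting commit files by version replays transactions in the order they happened.
  def orderedVersions(fileNames: Seq[String]): Seq[Long] =
    fileNames.flatMap(name => versionOf(name)).sorted
}

val names = Seq(
  "00000000000000000001.json",
  "00000000000000000000.json",
  "00000000000000000010.checkpoint.parquet",
  "_last_checkpoint"
)
println(DeltaLogPaths.commitFileName(3))      // 00000000000000000003.json
println(DeltaLogPaths.orderedVersions(names)) // List(0, 1)
```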

When a job’s commit starts, it takes a snapshot of the current Delta Log. When the commit action completes, it checks whether another process has updated the Delta Log in the meantime. If it has, Delta Lake checks whether those concurrent changes conflict with its own; if they do not, it updates its view of the Delta table and attempts again to register the commit (a genuine conflict makes the write fail instead).

If the Delta Log has not been updated by another process, it records the commit in the Delta Log.
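The commit protocol described above can be sketched as an in-memory model of optimistic concurrency control. This is a toy under stated assumptions, not Delta Lake’s code: `OptimisticLog`, `LogEntry`, and the caller-supplied `conflicts` predicate are hypothetical, and an `AtomicReference` compare-and-set stands in for the storage-level guarantee that only one writer can record a given version.

```scala
import java.util.concurrent.atomic.AtomicReference

// Toy model (hypothetical): one entry per committed version.
final case class LogEntry(version: Long, writer: String, files: Set[String])

final class OptimisticLog {
  private val entries = new AtomicReference(Vector.empty[LogEntry])

  // A reader or writer starts from a snapshot of the log.
  def snapshot: Vector[LogEntry] = entries.get()

  // Tries to record `files` as the next version. Commits that landed after
  // `readSnapshot` are checked with `conflicts`: non-conflicting ones just move
  // this commit to a later version, a conflicting one aborts the write.
  def commit(
      writer: String,
      files: Set[String],
      readSnapshot: Vector[LogEntry],
      conflicts: LogEntry => Boolean = _ => false
  ): Long = {
    var checkedUpTo = readSnapshot.size
    while (true) {
      val current = entries.get()
      val concurrent = current.drop(checkedUpTo) // commits not checked yet
      if (concurrent.exists(conflicts))
        throw new IllegalStateException(s"$writer conflicts with a concurrent commit")
      val entry = LogEntry(current.size.toLong, writer, files)
      // Succeeds only if nobody appended since `current` was read.
      if (entries.compareAndSet(current, current :+ entry)) return entry.version
      checkedUpTo = current.size // lost the race: re-check only the newer commits
    }
    throw new IllegalStateException("unreachable")
  }
}

val log = new OptimisticLog

// Writers A and B both start from the same (empty) snapshot.
val snapA = log.snapshot
val snapB = log.snapshot
val vA = log.commit("A", Set("a.parquet"), snapA)
val vB = log.commit("B", Set("b.parquet"), snapB) // finds A's commit, no conflict: version 1

// Writer C reads a snapshot, then D commits a change that C's rule treats as a conflict.
val snapC = log.snapshot
log.commit("D", Set("shared.parquet"), log.snapshot)
val conflictDetected =
  try { log.commit("C", Set("c.parquet"), snapC, _.files.contains("shared.parquet")); false }
  catch { case _: IllegalStateException => true }

println((vA, vB))         // (0,1)
println(conflictDetected) // true
```

Writers take no locks while they work and only coordinate at commit time, which suits workloads where concurrent writers rarely touch the same data.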

Durability means that data remains available and can be restored in case of a system failure.

In Delta Lake, once a transaction is committed, it is written to disk, so it remains available and can be restored after any system failure. This satisfies durability.
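On a local filesystem, two properties of a commit file, durability and "only one writer per version", can be approximated with standard `java.nio` options. In this sketch the helper `writeCommit` and the JSON payload are hypothetical: `CREATE_NEW` makes creation fail if the version file already exists, and `SYNC` requests that the bytes reach the storage device before the call returns. Delta Lake itself delegates these guarantees to storage-specific log store implementations, because object stores such as S3 offer different guarantees than a local disk.

```scala
import java.nio.charset.StandardCharsets
import java.nio.file.{FileAlreadyExistsException, Files, Path}
import java.nio.file.StandardOpenOption.{CREATE_NEW, SYNC, WRITE}

// Hypothetical helper: records commit `version` as a brand-new file in `logDir`.
// CREATE_NEW fails if the file already exists, so each version is written once;
// SYNC asks for the bytes to reach the storage device before the call returns.
def writeCommit(logDir: Path, version: Long, json: String): Boolean = {
  val file = logDir.resolve(f"$version%020d.json")
  try {
    Files.write(file, json.getBytes(StandardCharsets.UTF_8), CREATE_NEW, WRITE, SYNC)
    true
  } catch {
    case _: FileAlreadyExistsException => false // version taken: re-read the log, retry
  }
}

val logDir = Files.createTempDirectory("delta-log-demo")
val first = writeCommit(logDir, 0, """{"note":"commit by A"}""")
val second = writeCommit(logDir, 0, """{"note":"commit by B"}""") // same version

val stored = new String(
  Files.readAllBytes(logDir.resolve("00000000000000000000.json")),
  StandardCharsets.UTF_8
)
println((first, second)) // (true,false)
println(stored)          // {"note":"commit by A"}
```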

Asif Mughal

Asif is Head of Big Data at TenX with over 12 years of consulting experience.

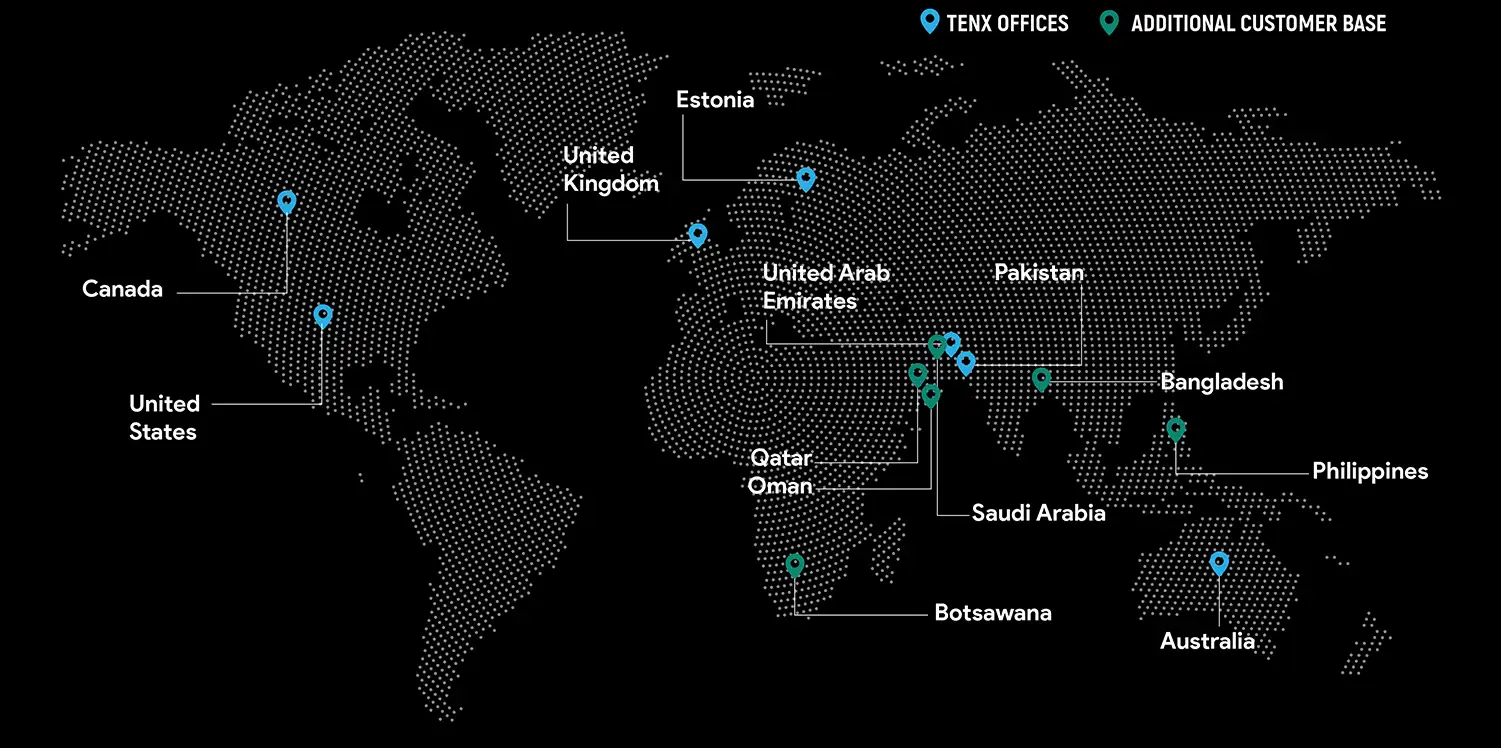

Global Presence

TenX drives innovation with AI consulting, blending data analytics, software engineering, and cloud services.

Ready to discuss your project?