What is Delta Lake?

Delta Lake is an open-source storage layer that brings reliability to data lakes. It provides ACID transactions and scalable metadata handling, and it unifies streaming and batch data processing. Delta Lake runs on top of your existing data lake and is fully compatible with Apache Spark APIs.

Why Delta Lake? Key Features of Delta Lake

Delta Lake offers ACID transactions via optimistic concurrency control between writes, snapshot isolation so that readers never see partially written data while someone else is writing, data versioning and rollback, and schema enforcement to guard against unexpected schema and data type changes.

ACID transactions on Spark

Serializable isolation guarantees that users never see inconsistent data. In a typical data lake, several users read and write the same data at once, and its integrity must be preserved. ACID is a core feature of most databases, but on HDFS or S3 it is generally hard to give the same guarantees. To implement ACID transactions, Delta Lake keeps a transaction log that tracks every commit made to the table directory. Delta Lake provides serializable isolation to keep the data consistent across many users.
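
The optimistic concurrency idea behind these commits can be sketched in a few lines of plain Python. This is a toy model, not Delta Lake's implementation: the helper names `latest_version` and `try_commit` are hypothetical, and the zero-padded JSON file names only imitate the layout of a commit log. A real implementation would also check whether two commits logically conflict before retrying, which this sketch skips.

```python
import json
import os
import tempfile


def latest_version(log_dir):
    """Return the highest committed version, or -1 for an empty log."""
    versions = [int(name.split(".")[0])
                for name in os.listdir(log_dir) if name.endswith(".json")]
    return max(versions, default=-1)


def try_commit(log_dir, read_version, actions):
    """Try to commit `actions` as version read_version + 1 (hypothetical helper).

    Mode "x" creates the file only if it does not exist yet, so two writers
    racing for the same version number cannot both succeed.
    """
    path = os.path.join(log_dir, f"{read_version + 1:020d}.json")
    try:
        with open(path, "x") as f:
            json.dump(actions, f)
        return True
    except FileExistsError:
        return False


log_dir = tempfile.mkdtemp()

# Two writers both read the table while the log is still empty.
seen_by_a = latest_version(log_dir)
seen_by_b = latest_version(log_dir)

a_ok = try_commit(log_dir, seen_by_a, [{"add": "part-a.parquet"}])
b_ok = try_commit(log_dir, seen_by_b, [{"add": "part-b.parquet"}])

# Writer B lost the race, so it re-reads the log and retries on top of A's commit.
b_retry_ok = b_ok or try_commit(
    log_dir, latest_version(log_dir), [{"add": "part-b.parquet"}]
)
```

Neither writer takes a lock up front. Each assumes it will succeed, and the loser of the race simply retries against the newer version.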

Scalable metadata handling

Delta Lake treats metadata just like data, leveraging Spark’s distributed processing power to handle all its metadata. As a result, Delta Lake can handle petabyte-scale tables with billions of partitions and files with ease.

Open Format

All data in Delta Lake is stored in Apache Parquet format, which lets Delta Lake use the efficient compression and encoding schemes that are native to Parquet.

Schema Enforcement

Schema enforcement, also known as schema validation, is how Delta Lake ensures data quality: it checks the schema of the data being written against the schema of the table and rejects the write if they do not match. It works as a gatekeeper, like the front desk manager of a busy restaurant that only accepts reservations. Schema enforcement is typically applied to tables that directly feed ML algorithms, BI dashboards, data visualization tools, and any production system that requires strongly typed, semantically consistent data.
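
As a minimal sketch of the gatekeeper idea, the following plain-Python function rejects any batch whose rows do not match the table schema. The function `enforce_schema` and the dictionary-based schema are assumptions made for illustration. Delta Lake's actual rules are more nuanced than an exact match, so treat this only as a picture of the accept-or-reject behaviour.

```python
def enforce_schema(table_schema, rows):
    """Return rows unchanged, or raise ValueError on the first mismatch.

    table_schema maps column name -> expected Python type (toy stand-in
    for a real table schema).
    """
    for i, row in enumerate(rows):
        if set(row) != set(table_schema):
            raise ValueError(
                f"row {i}: columns {sorted(row)} do not match "
                f"table columns {sorted(table_schema)}"
            )
        for column, expected_type in table_schema.items():
            if not isinstance(row[column], expected_type):
                raise ValueError(
                    f"row {i}: column {column!r} expected "
                    f"{expected_type.__name__}, got {type(row[column]).__name__}"
                )
    return rows


table_schema = {"id": int, "name": str}

# A batch that matches the table schema is accepted as-is.
accepted = enforce_schema(table_schema, [{"id": 1, "name": "a"}])

# A batch with a wrongly typed column is rejected as a whole.
try:
    enforce_schema(table_schema, [{"id": "2", "name": "b"}])
    rejected = False
except ValueError:
    rejected = True
```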

Schema Evolution

Schema evolution lets users change a table's current schema easily. It is most often used during append or overwrite operations, but it can be used whenever you intend to change the table schema. After all, adding a new column should not be hard.
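
One way to picture schema evolution is as a merge of the table schema with the schema of the incoming data. The sketch below is a toy model under stated assumptions: `evolve_schema` is a hypothetical helper, types are plain strings, new columns are accepted, and a type change on an existing column is refused. In Delta Lake itself this behaviour is opt-in, for example through the `mergeSchema` write option.

```python
def evolve_schema(table_schema, incoming_schema):
    """Return the table schema extended with any new incoming columns.

    Existing columns keep their type; an incoming column that disagrees
    with the existing type is rejected rather than silently changed.
    """
    merged = dict(table_schema)
    for column, col_type in incoming_schema.items():
        if column in merged and merged[column] != col_type:
            raise ValueError(
                f"column {column!r}: cannot change type "
                f"{merged[column]} -> {col_type}"
            )
        merged[column] = col_type
    return merged


table_schema = {"id": "int", "name": "string"}

# An append that carries one new column widens the table schema.
evolved = evolve_schema(table_schema, {"id": "int", "country": "string"})
```

Rows written before the change would simply have no value for the new `country` column.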

Time travel

Data versioning in Delta Lake enables rollbacks, full historical audit trails, and reproducible machine learning experiments.
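
Time travel follows naturally from keeping an ordered log of commits: replaying the log up to a given version reconstructs the table as it was at that point. The sketch below is a toy model with a hypothetical `snapshot_at` function and made-up file names. Delta Lake exposes the same idea through reader options such as `versionAsOf`.

```python
def snapshot_at(log, version):
    """Return the set of data files that make up the table at `version`."""
    if not 0 <= version < len(log):
        raise ValueError(f"version {version} does not exist")
    files = set()
    for commit in log[: version + 1]:
        for action, path in commit:
            if action == "add":
                files.add(path)
            elif action == "remove":
                files.discard(path)
    return files


# Each commit is a list of (action, file) pairs; file names are illustrative.
log = [
    [("add", "part-0.parquet")],                                # version 0: initial load
    [("add", "part-1.parquet")],                                # version 1: append
    [("remove", "part-0.parquet"), ("add", "part-2.parquet")],  # version 2: rewrite
]

v0 = snapshot_at(log, 0)
v1 = snapshot_at(log, 1)
v2 = snapshot_at(log, 2)
```

Rolling back amounts to reading an older version and writing it out as a new commit, so the history itself is never rewritten.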

Updates and Deletes

Delta Lake architecture supports merge, update and delete operations to enable complex use cases like change-data-capture, slowly-changing-dimension (SCD) operations, streaming upserts, and so on.
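
The semantics of a merge driven by a change-data-capture feed can be sketched without Spark. In this toy model, the `merge` function, the `op` field, and the sample rows are all assumptions for illustration: a change whose key matches an existing row updates it, an unmatched change inserts a new row, and a delete change removes the row.

```python
def merge(target, changes, key="id"):
    """Apply a list of change records to target rows, matching on `key`."""
    result = {row[key]: row for row in target}
    for change in changes:
        if change.get("op") == "delete":
            result.pop(change[key], None)
        else:
            # Matched keys are overwritten (update); new keys are added (insert).
            result[change[key]] = {k: v for k, v in change.items() if k != "op"}
    return sorted(result.values(), key=lambda row: row[key])


target = [{"id": 1, "name": "Ann"}, {"id": 2, "name": "Bob"}]
changes = [
    {"op": "update", "id": 2, "name": "Robert"},
    {"op": "insert", "id": 3, "name": "Cy"},
    {"op": "delete", "id": 1},
]

merged = merge(target, changes)
```

In Delta Lake the whole merge is recorded as a single commit, so readers see either the table before the changes or the table after all of them.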

Delta Lake Transaction Log

The Delta Lake transaction log, also known as the delta log, is an ordered record of every transaction that has been performed on a Delta Lake table since its creation. It serves as the single source of truth for the table and has two main uses:

1. It allows multiple users to read and write a given table at the same time. It tracks every change that users make to the table and shows each user a correct view of the data.
2. It implements atomicity. Every transaction on a Delta Lake table either completes fully or does not complete at all.
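
The atomicity guarantee can be illustrated with a toy table in which readers trust only the log. The class `ToyTable` and its methods are hypothetical: data files are written first, and a single log entry makes them visible all at once. If the writer fails before that entry is appended, the files it wrote stay invisible to readers.

```python
class ToyTable:
    """Toy model: storage holds files, but only the log defines the table."""

    def __init__(self):
        self.storage = set()  # every data file physically written
        self.log = []         # ordered list of committed transactions

    def write(self, files, fail_before_commit=False):
        self.storage.update(files)    # step 1: write the data files
        if fail_before_commit:
            raise RuntimeError("writer crashed before committing")
        self.log.append(list(files))  # step 2: one log entry commits them all

    def visible_files(self):
        """Files a reader sees: only those referenced by committed entries."""
        return {f for commit in self.log for f in commit}


table = ToyTable()
table.write(["a.parquet", "b.parquet"])

try:
    table.write(["c.parquet", "d.parquet"], fail_before_commit=True)
except RuntimeError:
    pass  # the failed transaction left files behind but no log entry

visible = table.visible_files()
orphaned = table.storage - visible
```

The leftover files from the failed write are harmless to readers because nothing in the log points at them.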

Conclusion

As business problems and requirements evolve over time, so does the structure of the data. With Delta Lake, incorporating new dimensions becomes easy as the data changes. Delta Lake makes data lakes faster, more reliable, and easier to manage, so you can improve the quality of your data lake with a safe and scalable cloud service.

Databricks Delta and Delta Lake are different technologies. You need to pay for Databricks Delta whereas Delta Lake is free.

Asif Mughal

Asif is Head of Big Data at TenX with over 12 years of consulting experience

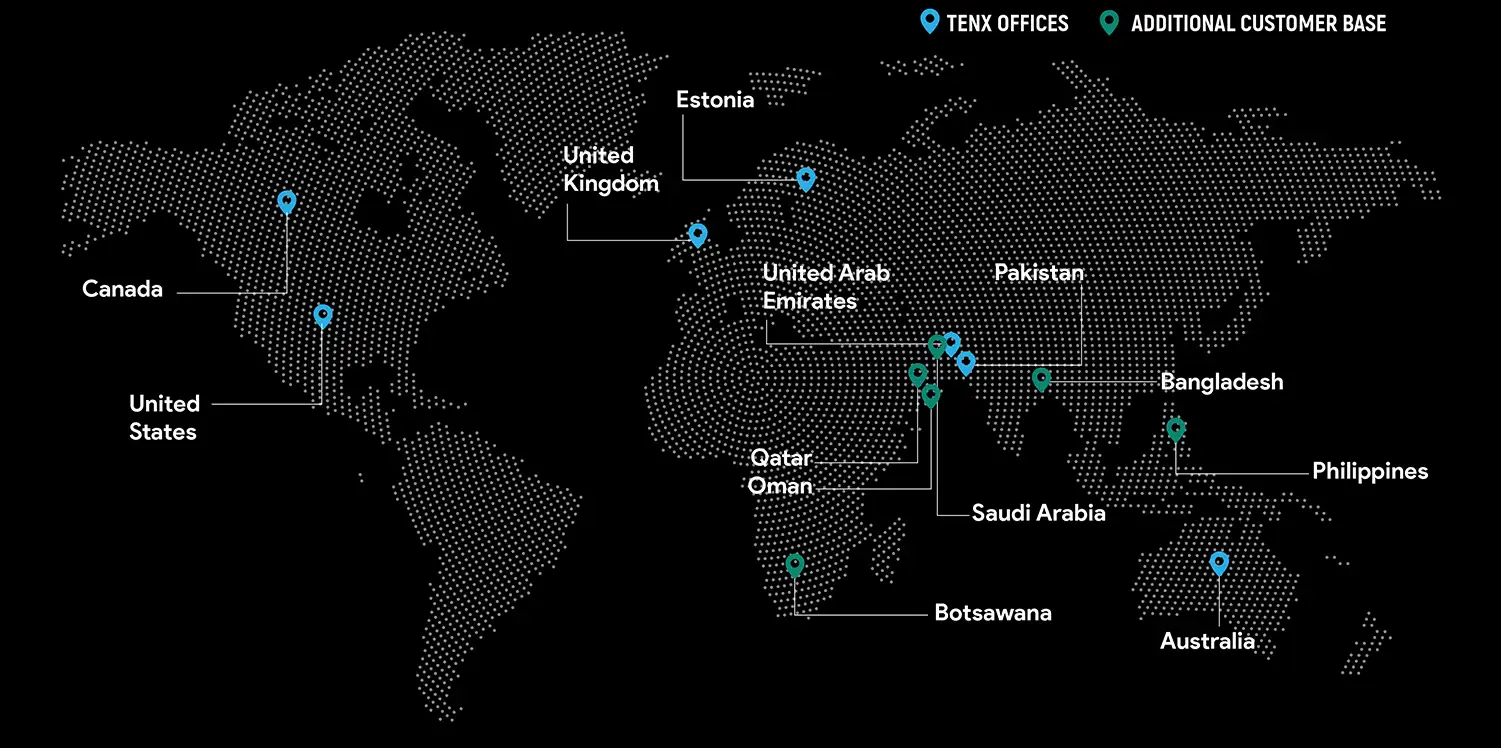
